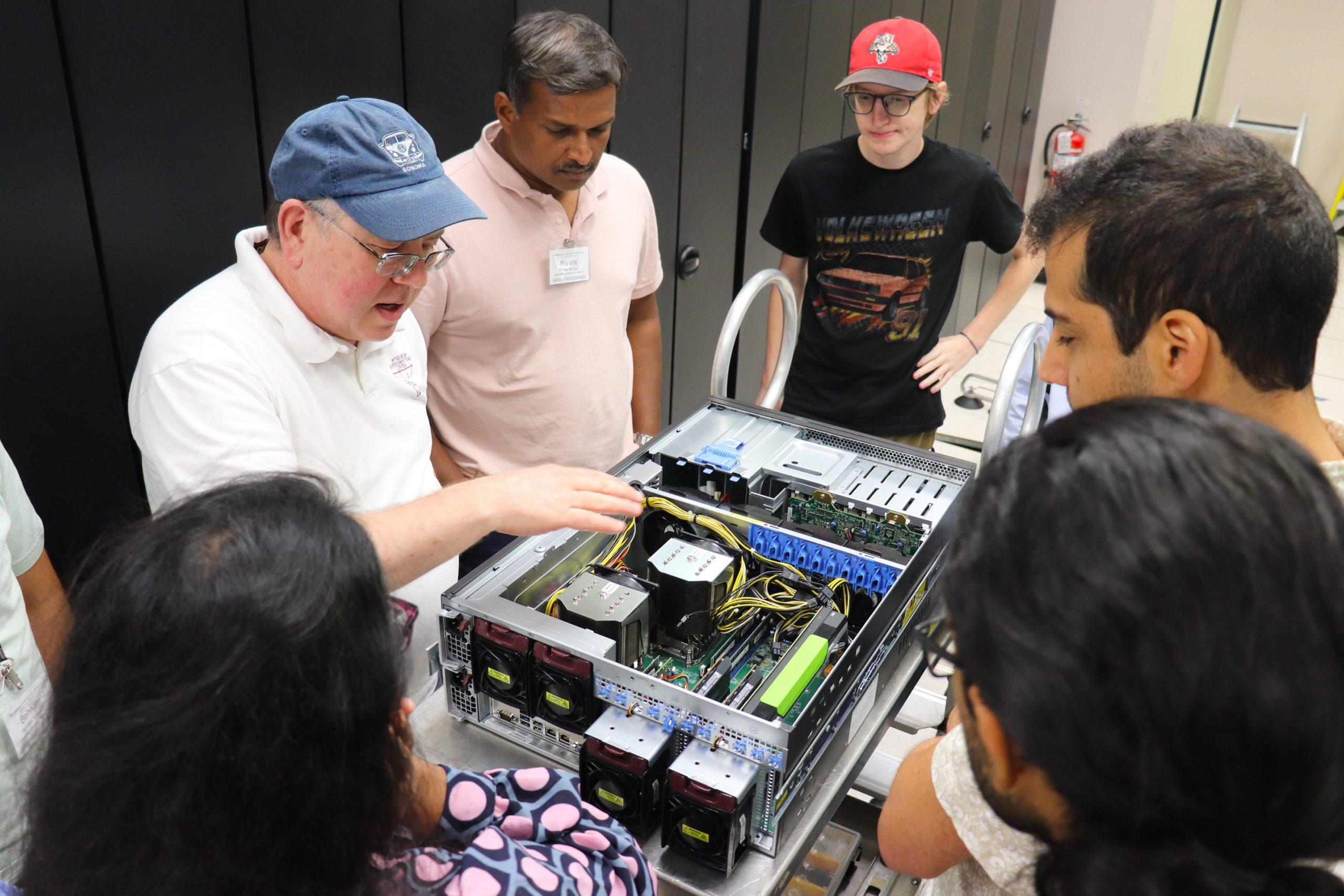

ByteBoost students and PSC staff listen as Derek Simmel, Senior Information Security Officer, gives a tour of the PSC Machine Room.

Student Projects Tackle Challenges in Drug Discovery, Congressional Policy, Coordinating Heavy Air Traffic, and More

Students from across the U.S. gathered at PSC in August to present their artificial intelligence (AI) research projects as part of the 2024 ByteBoost Summer Workshop. These projects studied critical topics as diverse as engineering and discovering new drugs, understanding congressional policy outcomes, and safely controlling traffic for small, individually owned aircraft.

The ByteBoost program brought together 24 students to deepen their knowledge of, and experiment with, innovative technologies such as Neocortex at PSC, ACES at Texas A&M University, and Ookami at Stony Brook University, all cyberinfrastructure testbeds in the NSF's ACCESS program. Students attended in-depth technical presentations, ran hands-on exercises, consulted with experts, proposed research projects, and toured the PSC machine room.

The ByteBoost bus on the way to tour the PSC Machine Room.

Congressional Policy Analysis using ML and HPCA

Team CivixLAB: Gogoal Falia, Texas A&M University; Disha Ghoshal, Stony Brook University; Kundan Kumar, Iowa State University; Aakashdeep Sil, Texas A&M University (Mentors: Dana O'Connor and Paola Buitrago, PSC)

This team explored how policy making in the U.S. Congress evolved between 1973 and 2024, and how those changes reflected shifts in social challenges in the country. They chose Neocortex to pursue this project because of its capabilities with Big Data machine learning.

A major challenge for the project was the unconventional nature of the initial data. The team had to take public policy documents, including tax, finance, health, and climate legislation, and first classify and then summarize the bills so they could be expressed as data that a machine learning algorithm could use.

The CivixLAB team successfully summarized the documents and generated data files that allowed them to begin pre-training their AI. Pre-training is the largest, initial stage of AI learning, in which the model ingests large amounts of data to gain a broad understanding of it. Next, the team would like to complete pre-training and then fine-tune their AI to produce insights into how legislation and social developments interact.

Bringing DeepPath to IPUs

Andrew Pang, Georgia Tech (Mentor: Zhenhua He, Texas A&M University)

This project used Texas A&M's ACES system to explore transitional states in protein activity. Proteins control many of the chemical reactions important to life largely by making the transitional states between reagents and the desired end products easier to reach. If scientists can better understand how proteins change their own shapes and those of their reagents to favor desired transitional states, they can engineer proteins to perform more useful tasks, both medically and scientifically.

Pang deployed the DeepPath AI method for identifying transition states on ACES because of the system’s unique intelligence processing units (IPUs). Designed by the Graphcore company to handle AI learning tasks more efficiently than the current state-of-the-art graphics processing units (GPUs), these processors feature prominently in ACES, which itself was designed as a composable supercomputer that routes computations to the most efficient type of processor for each step of a computation.

The project successfully deployed the first step of DeepPath, called Energy Critic, on ACES. This step calculates the energy required for the protein to reach a given state, with lower-energy states being easier to reach. Future work would involve running DeepPath's other two steps. Explorer uses Energy Critic to explore the likelihood of different states, and Structure Builder predicts the larger-scale protein movements needed to reach those states. Pang would also like to deploy DeepPath on other leading-edge AI computers, particularly PSC's Neocortex system.

ML-Based Drug Discovery against Dengue Serotype 1 Virus Evaluated Using MD Simulations

Team ViroML: Aadhil Haq, Texas A&M University; Bernard Moussad, Virginia Tech; Eneye Ajayi, University of Southern California; Kamrun Nahar Keya, Iowa State University; Oriana Silva, University of North Texas (Mentor: Wes Brashear, Texas A&M University)

The ViroML team used ACES to explore possible new drugs to combat Dengue fever, a sometimes-deadly, mosquito-transmitted infection that affects 100 to 400 million people every year. Over 40 percent of the world’s population is at risk from Dengue.

The team's strategy was to use AI to analyze known peptide molecules that stick to the dengue virus (DENV) envelope protein, which the virus uses to attach to human cells. Using that information, they aimed to generate new, yet-undiscovered peptides that are more effective at interfering with virus attachment and infection.

Running AI software on ACES that modeled the structure of the envelope protein and generated peptides to bind to it, the students successfully generated candidate peptides and simulated their docking with the protein. Some of their generated candidates were competitive with known blockers of one of the virus's serotypes. Future work would include refining and optimizing their simulations to identify even better virus blockers, finding blockers for the virus's other serotypes, and using AI to automate the discovery of larger numbers of viral blockers.

PSC’s Paola Buitrago, Director of Artificial Intelligence & Big Data, and Derek Simmel, Senior Information Security Officer, present at ByteBoost.

Optimizing Vertiport Networks with Mobile Location Data and Agent-Based Modeling

Team SkySync: Behnam Tahmasbi, University of Maryland, College Park; Caden Empey, University of Pittsburgh; Darshan Sarojini, University of California, San Diego; Farnoosh Roozkhosh, University of Georgia; Matthew Moreno, University of Michigan; Wenyu Wang, Ohio State University (Mentors: Eva Siegmann, Stony Brook University, and Zhenhua He, Texas A&M University)

The combination of AI and rotorcraft technologies developed for aerial drones has helped make widespread ownership of small aircraft a feasible prospect. The SkySync team analyzed how AI working on real-time data could control networks of many small aircraft so that a crowded sky can be both safe and efficient. They ran their agent-based simulations on ACES and on Stony Brook University's Ookami system.

Ookami features ARM processors, a processor family originally developed for smartphones and tablets, in a novel architecture designed to improve on GPU technology for AI learning. Like ACES, its design is particularly friendly to installing and running the Conda environments the team members chose to use. Initial results indicated that their model, which features AI-guided simulated aircraft, could calculate safe routes that brought each aircraft from its origin to its desired destination, with possible reductions in transportation cost and time.

Future work would include scaling their simulations up to more densely populated areas and adding airborne taxis. The team members would also like to enhance their techniques to make the vertiport networks more efficient, and to make the agents more complex and realistic so their simulations are more accurate.

Sparse Matrix Operations

Team Cheetah: Omid Asudeh, University of Utah (Mentor: Eva Siegmann, Stony Brook University)

The Cheetah team explored sparse matrix operations in AI learning. Sparse matrices are matrices in which most of the values are zero. Many real-world AI applications, such as the large language models that underlie tools like ChatGPT, involve data with this property. That poses a challenge, because storing and processing all those zeros wastes memory and carries a huge computational cost. These problems benefit from compressing the data to store only the non-zero values, which promises large improvements in the speed and cost of AI operations. But working with the compressed data requires sophisticated computation.

Asudeh successfully deployed the TACO SpMM (sparse matrix-dense matrix multiplication) software on Ookami. He explored how well the tool handled compressed sparse matrix data and found it effective even as he changed a number of the data's characteristics.
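To make the "store only the non-zero values" idea concrete, here is a minimal pure-Python sketch of one common compressed format, compressed sparse row (CSR), and a naive SpMM kernel that multiplies a CSR matrix by a dense matrix. It is an illustration under stated assumptions, not TACO's generated code. The function names `dense_to_csr` and `csr_spmm` are hypothetical, and TACO supports many formats and schedules beyond this one.

```python
from typing import List, Tuple

# Hypothetical helpers for illustration only; not part of TACO.

def dense_to_csr(dense: List[List[float]]) -> Tuple[List[float], List[int], List[int]]:
    """Compress a dense matrix into CSR form, keeping only non-zero entries."""
    values: List[float] = []   # the non-zero entries, stored row by row
    col_idx: List[int] = []    # column index of each stored value
    row_ptr: List[int] = [0]   # row i's entries live in values[row_ptr[i]:row_ptr[i+1]]
    for row in dense:
        for j, x in enumerate(row):
            if x != 0:
                values.append(x)
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr


def csr_spmm(values: List[float], col_idx: List[int], row_ptr: List[int],
             b: List[List[float]]) -> List[List[float]]:
    """Compute C = A @ B where A is in CSR form and B is dense (SpMM).

    Only A's stored non-zeros are visited, so the work scales with
    nnz(A) * cols(B) instead of rows(A) * cols(A) * cols(B).
    """
    n_rows = len(row_ptr) - 1
    n_cols_b = len(b[0]) if b else 0
    c = [[0.0] * n_cols_b for _ in range(n_rows)]
    for i in range(n_rows):
        for k in range(row_ptr[i], row_ptr[i + 1]):
            a_ik = values[k]        # non-zero A[i][col_idx[k]]
            b_row = b[col_idx[k]]   # matching row of B
            for j in range(n_cols_b):
                c[i][j] += a_ik * b_row[j]
    return c


if __name__ == "__main__":
    a = [[0, 0, 3, 0],
         [1, 0, 0, 0],
         [0, 0, 0, 0],
         [0, 2, 0, 4]]
    vals, cols, ptr = dense_to_csr(a)
    print(vals, cols, ptr)  # [3, 1, 2, 4] [2, 0, 1, 3] [0, 1, 2, 2, 4]
    b = [[1, 0], [0, 1], [1, 1], [2, 0]]
    print(csr_spmm(vals, cols, ptr, b))  # [[3.0, 3.0], [1.0, 0.0], [0.0, 0.0], [8.0, 2.0]]
```

In this example, only 4 of the 16 entries are stored. CSR makes row-by-row traversal cheap, which suits SpMM's accumulation of each output row. Compilers such as TACO exist because the best format and loop order depend on the data's sparsity pattern.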

Future work on the project would explore running other sparse matrix software on Ookami. Asudeh would also like to run the work on Ookami’s CPU and GPU nodes, to measure how much the advanced ARM nodes are accelerating the computations.

Performance Evaluation of Intelligent System for the Detection of Wild Animals in Nocturnal Period for Automobile Application in Multiple Systems

Yuvaraj Munian, Texas A&M University (Mentor: Dana O’Connor, PSC)

Munian used Neocortex to tackle the tricky task of detecting wildlife approaching an oncoming vehicle from the side, to prevent collisions. In the U.S., collisions with wildlife kill about 200 people and injure 26,000 each year, and cause more than $8 billion in property damage.

Neocortex was Munian's system of choice for the work because its architecture lends itself to fast training of the convolutional neural network (CNN) approach needed to quickly classify and recognize animals at the side of the road. He trained the CNN on a visual data set in which the animals had been labeled. He is now ready to test it on unlabeled data.

In addition to completing testing on Neocortex, Munian would like to repeat the work on ACES, to determine whether either of the two novel AI architectures provides faster learning.

Benchmarking Flash Attention 2/3 against Cerebras CS2

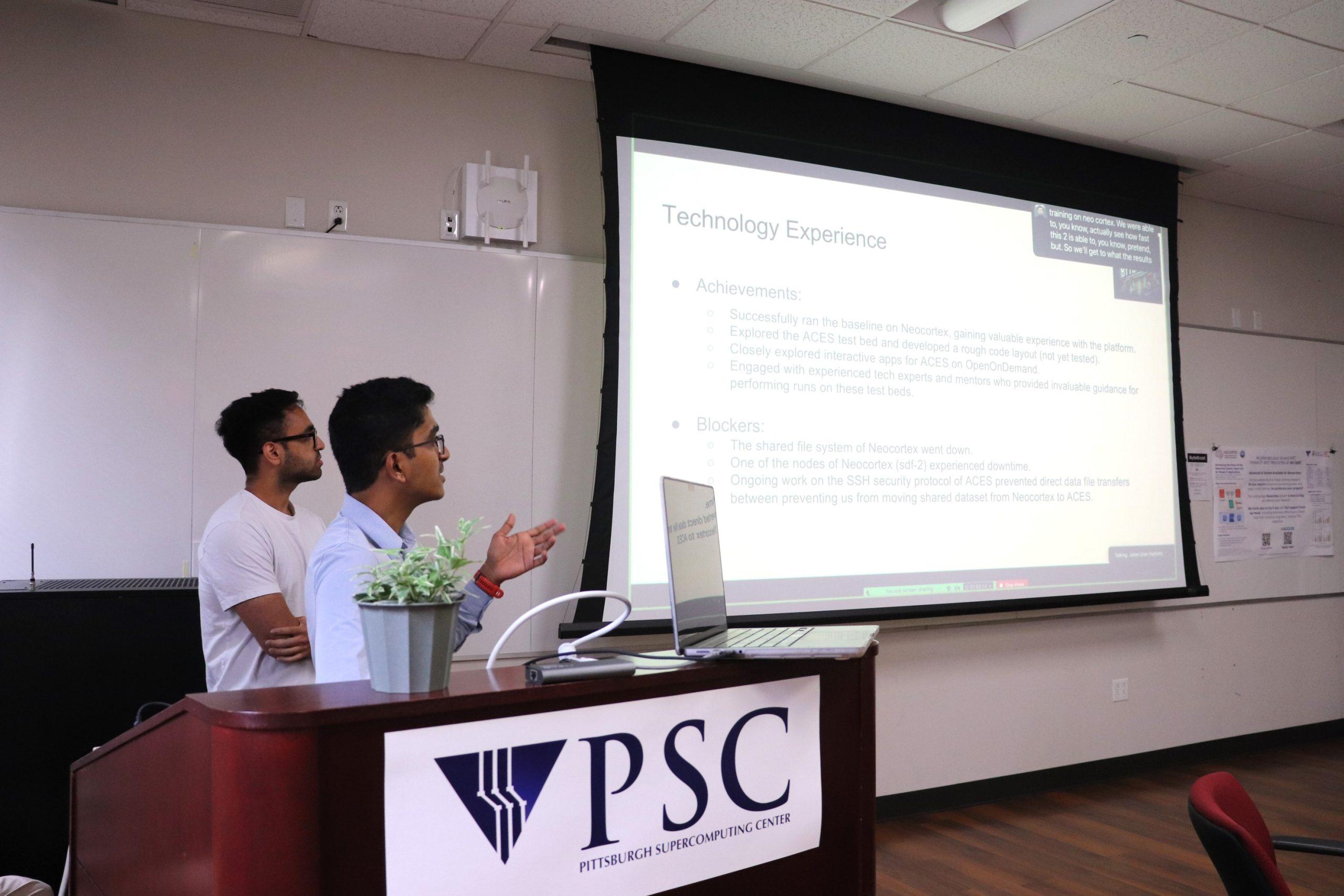

Ritvik Prabhu and Atharva Joshi present for Team FlashBert.

Team FlashBert: Atharva Joshi, University of Southern California; Ritvik Prabhu, Virginia Tech (Mentor: Mei-Yu Wang, PSC)

The FlashBert team focused on methods for making large language models (LLMs) more efficient and less energy-intensive, comparing them on PSC's Neocortex and Texas A&M's ACES. LLMs underlie some remarkable recent advances in AI, most notably applications such as ChatGPT. But they require enormous computing power to run, and they consume large amounts of energy as well.

The team first deployed their Flash Attention model on Neocortex. The PSC system was built around two Cerebras CS2 systems, each powered by a Wafer Scale Engine (WSE). The WSE is a new approach to AI that places hundreds of thousands of processor cores on a single, dinner-plate-sized silicon wafer. In Neocortex, the WSEs are coordinated by a cutting-edge, high-data-capacity HPE CPU server, which enhances the system's ability to work with massive data. This arrangement holds promise for greatly accelerating communication between the processors, which in turn speeds AI learning. FlashBert's next aim was to deploy the model on ACES, whose flash memory could potentially make some aspects of the learning even faster.

The team ran their code successfully on Neocortex. They also began adapting the code to run on ACES. Future work would include finishing the ACES deployment and testing a sparse neural network version on Ookami, whose architecture might offer similar performance while using less power.