By Kimberly Mann Bruch, SDSC Communications, and Ken Chiacchia, PSC

Virtual Dolphin Head Shows How Sound Moves through the Skull, Suggesting How Sounds May Offer Directional Cues for Navigation and Detection

Whales navigate, find food, and communicate over vast distances via sound. But we don’t really understand how they perceive and process the complex information embedded in incoming sound waves. Using simulations on PSC’s Bridges-2 supercomputer, a team from the University of Washington showed how different sounds from different directions move through a virtual dolphin’s head and provide directional cues.

WHY IT’S IMPORTANT

Humans are visual animals. Sure, we perceive our world using our senses of smell, touch, taste, and hearing. But we really do tend to trust our eyes most of all. For H. sapiens, “seeing is believing” is more than just an aphorism.

Not so for whales and dolphins (cetaceans), which need to scan the dark ocean depths over far greater distances than their eyes could ever see. Instead, they use high-frequency acoustic “pings” to navigate and find food underwater and in the air.

This is hard for us visual creatures to understand. Of course, we’ve developed technology for detection by sound: sonar. It allows ships to scan the ocean ahead for obstacles. It also helps fishermen and oceanographers detect and observe fish and zooplankton. But we still need machines to translate that information into a picture we can see, as in a sonogram.

“The toothed whales’ ability to discriminate and recognize different targets using echolocation is unmatched by our engineered sonar systems at the moment. If we have a better understanding of how the whales achieve this, we can develop better instruments to observe animals in the ocean and understand how they respond to the changing climate.” — Wu-Jung Lee, University of Washington

Toothed whales, a group that includes dolphins, sperm whales, and killer whales, have evolved remarkable abilities to communicate, hunt, and navigate using sound in diverse underwater environments. They face complex auditory scenes, from interacting with their own kind to detecting echoes bouncing off prey and underwater features. Yet our understanding of how these whales perceive direction, and of the physical mechanisms behind it, including the role of details like the frequency of the incoming sound, remains limited.

Thanks to allocations from ACCESS, the NSF’s network of supercomputing resources, of which PSC is a leading member, Wu-Jung Lee, a principal oceanographer at the University of Washington, and her postdoc YeonJoon Cheong acquired computing time on PSC’s flagship Bridges-2 system. The scientists used Bridges-2 to better understand how whales pick up directional signals from incoming sounds.

HOW PSC HELPED

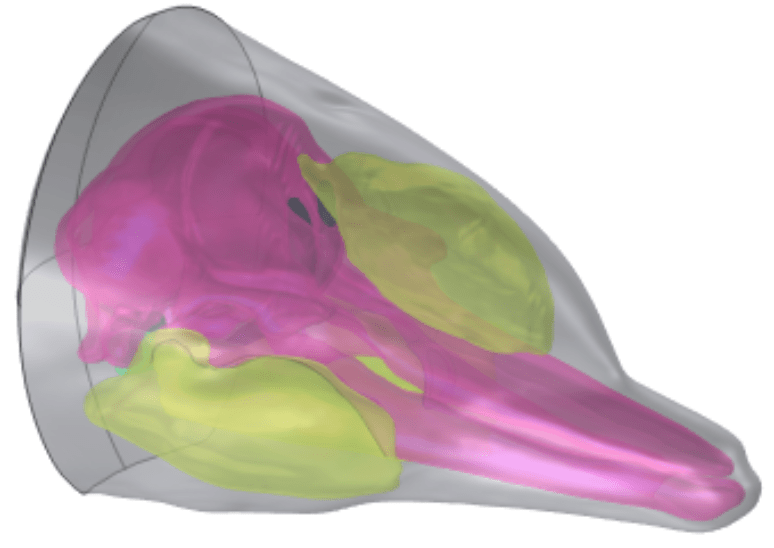

Three-dimensional volumetric representation of the head of a bottlenose dolphin, reconstructed using CT scans.

Using data from CT scans, Lee and Cheong created a virtual model of a dolphin’s head on Bridges-2. This allowed them to test how different components of the virtual head interact with sounds coming in from different directions before those sounds are received at the dolphin’s ears.

Scientists knew that whales receive sound via their lower jaw, which guides the sound to the tympano-periotic complexes that surround the cochleae. The cochleae, the curling bone structures in the inner ear, then vibrate and pass along the signal to the auditory nerves. But how that setup conveys directional information wasn’t clear.

“From our previous experiences with an ACCESS Explore project, we found that access to the high-performance memory nodes on the Bridges-2 system was essential for our work. This is particularly true for simulating high-frequency sounds propagating in large whale heads, because of the vast number of model elements needed to analyze the sound’s interaction with biological structures in both time and frequency domains, which requires a high-performance computing resource like Bridges-2.” — Wu-Jung Lee, University of Washington

The team simulated different frequencies of sound coming toward the dolphin’s head from the left and the right. The resulting simulated head-related transfer functions, which describe how the head filters a sound on its way to each ear, showed that the direction and the frequency of a given sound both matter to what is received at the ears.

Lee’s team presented their findings at the 184th Meeting of the Acoustical Society of America.

Future work measuring how real dolphins respond to different sounds will help validate the Bridges-2 simulation results. The results of such experiments, as well as upcoming refinement of the simulations, will also allow the team to make more specific predictions about whale and dolphin echolocation signals.

In addition to Bridges-2, the group has ACCESS allocations on Jetstream2 at Indiana University and the NSF’s Open Storage Network, which they’re using in ongoing projects to classify echoes from different fish schools recorded by echosounders and to track bat activity in Seattle. They hope to share these findings in upcoming papers.